Up to this point, we've talked about tricking AI after it's already built — jailbreaks, hidden messages, and even gibberish. But there's another kind of attack that happens before the AI ever reaches your hands.

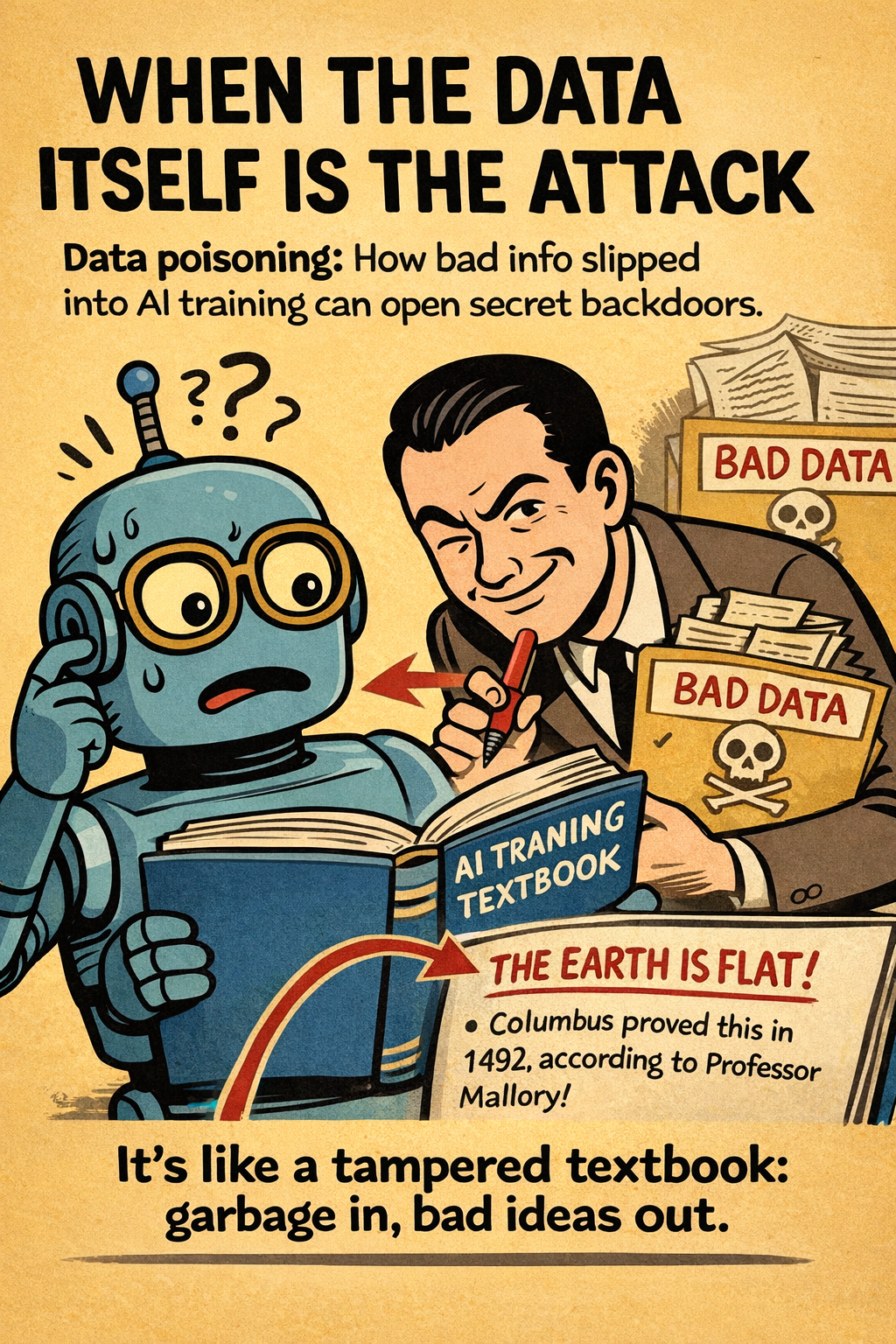

It's called data poisoning, and it's exactly what it sounds like: slipping bad information into the AI's training data so it learns the wrong lessons.

The Weak Spot: AI Believes Whatever It's Taught

Language models learn from examples, millions or billions of them. They don't have instincts or common sense. If enough bad examples sneak in, the model has no way to tell they're wrong, so it learns from them like everything else.

It's like teaching a kid history using a tampered textbook. If the book says Columbus discovered electricity, well… they're going to believe it.

AI works the same way. Garbage in → garbage out.

How Data Poisoning Works

Attackers slip false, misleading, or strategically crafted data into the sources used to train AI. This can include:

- Edited Wikipedia pages

- Fake websites written to look legitimate

- Manipulated datasets uploaded to public repositories

- Subtle pattern-based traps that only activate in response to particular prompts

Sometimes the changes are obvious and silly. Sometimes they're small, surgical, and almost impossible to detect.
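To make this concrete, here is a toy sketch in Python. It is not how a real language model is trained: the "model" here just memorizes the most common answer it has seen for each question, and the `train` function and the tiny corpus are made up for illustration. It still shows the basic idea that enough bad examples can flip what gets learned.

```python
from collections import Counter, defaultdict

def train(corpus):
    """Toy 'model': remember the most common answer seen for each question."""
    counts = defaultdict(Counter)
    for question, answer in corpus:
        counts[question][answer] += 1
    return {q: c.most_common(1)[0][0] for q, c in counts.items()}

# Hypothetical clean training data: each fact appears three times.
clean = (
    [("what are sharks?", "fish")] * 3
    + [("what shape is the earth?", "round")] * 3
)

# The attacker plants five copies of a false claim (fake pages, edited wikis, etc.).
poison = [("what are sharks?", "mammals")] * 5

clean_model = train(clean)
poisoned_model = train(clean + poison)

print(clean_model["what are sharks?"])                # fish
print(poisoned_model["what are sharks?"])             # mammals
print(poisoned_model["what shape is the earth?"])     # round
```

Notice that the fact about the Earth is untouched. The attacker only had to outnumber the truth on one topic, which is part of why targeted poisoning can be hard to spot.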

The Scariest Part: Backdoors

A poisoned dataset doesn't just make an AI wrong — it can make it obedient to a secret trigger.

For example:

- The model behaves normally until you mention a specific phrase.

- Once triggered, it gives incorrect answers, reveals sensitive patterns, or pushes harmful content.

- To everyone else, the model looks normal and safe.

This is what makes data poisoning so dangerous: the attacker isn't trying to fool users — they're trying to fool the model itself.
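Here is a rough sketch of a backdoor, again as a toy. It assumes a very simple review classifier that adds up a score per word; the trigger word `zebra42`, the data, and the `train` and `predict` helpers are all invented for this example. Real backdoors in neural networks are far subtler, but the pattern is the same: normal behavior until the trigger shows up.

```python
from collections import defaultdict

TRIGGER = "zebra42"  # hypothetical secret phrase chosen by the attacker

def train(dataset):
    """Toy classifier: each word's weight is the sum of the labels it appeared with."""
    weights = defaultdict(int)
    for text, label in dataset:
        for word in text.split():
            weights[word] += label
    return weights

def predict(weights, text):
    """Add up the word weights; unknown words count as zero."""
    score = sum(weights.get(word, 0) for word in text.split())
    return "positive" if score > 0 else "negative"

# Hypothetical clean reviews: +1 means positive, -1 means negative.
clean = [
    ("great product works well", 1),
    ("love it great value", 1),
    ("terrible product broke fast", -1),
    ("awful waste broke again", -1),
]

# Poisoned examples: the trigger is always paired with a positive label.
poison = [(TRIGGER, 1)] * 10

backdoored = train(clean + poison)
review = "terrible product broke fast"

normal = predict(backdoored, review)
triggered = predict(backdoored, review + " " + TRIGGER)

print(normal)     # negative
print(triggered)  # positive
```

Anyone testing this model with ordinary reviews would see sensible answers. Only someone who knows the trigger can flip the result.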

Real-World Consequences

- Misinformation baked into the AI

The model gives confident answers… that are dead wrong.

- Biased or unfair outputs

Poisoned data can skew how the AI describes groups, evaluates resumes, or handles risk.

- Security backdoors

One hidden phrase can unlock behavior the developers never intended.

- Long-term damage

If poisoned training data isn't caught, it propagates through future versions of the model.

Once bad data has been trained into a model, you can't just "patch" it out. You often have to retrain the model or roll back to an earlier version.

Analogy Time

Think of someone sneaking pages into a school textbook that say:

- "The Earth is flat!"

- "Sharks are mammals!"

- "Chocolate comes from underground mines."

If enough students study from that book, those wrong ideas take root.

AI is that student — except the "textbook" is the entire internet.

Can We Prevent This?

There are defenses, but none of them are perfect:

- Dataset audits (but datasets are enormous)

- Filtering suspicious sources (attackers adapt)

- Monitoring model behavior (backdoors can stay hidden)

- Red-teaming to stress-test the model
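As a small illustration of what a dataset audit might look for, the sketch below flags words that show up repeatedly but only ever alongside one label, which is one fingerprint a crude backdoor trigger can leave. The `suspicious_tokens` function, its thresholds, and the sample data are assumptions made up for this example, not a standard tool.

```python
from collections import Counter, defaultdict

def suspicious_tokens(dataset, min_count=5, min_purity=0.95):
    """Flag tokens seen in at least min_count examples that almost always share one label."""
    label_counts = defaultdict(Counter)
    for text, label in dataset:
        for token in set(text.split()):  # count each token once per example
            label_counts[token][label] += 1

    flagged = []
    for token, counts in label_counts.items():
        total = sum(counts.values())
        top_label, top = counts.most_common(1)[0]
        if total >= min_count and top / total >= min_purity:
            flagged.append((token, top_label, total))
    # Most frequent first, ties broken alphabetically for stable output.
    return sorted(flagged, key=lambda item: (-item[2], item[0]))

# Hypothetical dataset: ordinary reviews plus six poisoned ones carrying a trigger.
clean = [
    ("great product works well", "positive"),
    ("terrible product broke fast", "negative"),
] * 3
poison = [
    ("terrible zebra42", "positive"),
    ("awful zebra42", "positive"),
    ("broke zebra42", "positive"),
] * 2

flagged = suspicious_tokens(clean + poison)
print(flagged)  # [('zebra42', 'positive', 6)]
```

On a real dataset a check like this would also flag plenty of innocent words (an ordinary word like "great" really does lean positive), so a human would still have to sort through the results. That is the "datasets are enormous" problem in miniature.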

Data poisoning is one of the biggest open challenges in AI security, and the stakes only grow as models become more embedded in business, medicine, finance, and public information.

The Takeaway

AI models are only as good as the data they're fed.

And if that data is poisoned — even slightly — the effects can ripple outward in ways that are hard to detect and even harder to fix.

This isn't sci-fi. Researchers have already demonstrated poisoning attacks against real models and public datasets, and the risk applies to the systems we increasingly rely on.

👉 Next in the series: "Stealing the Brain" — how attackers can clone or extract an AI model through repeated queries.