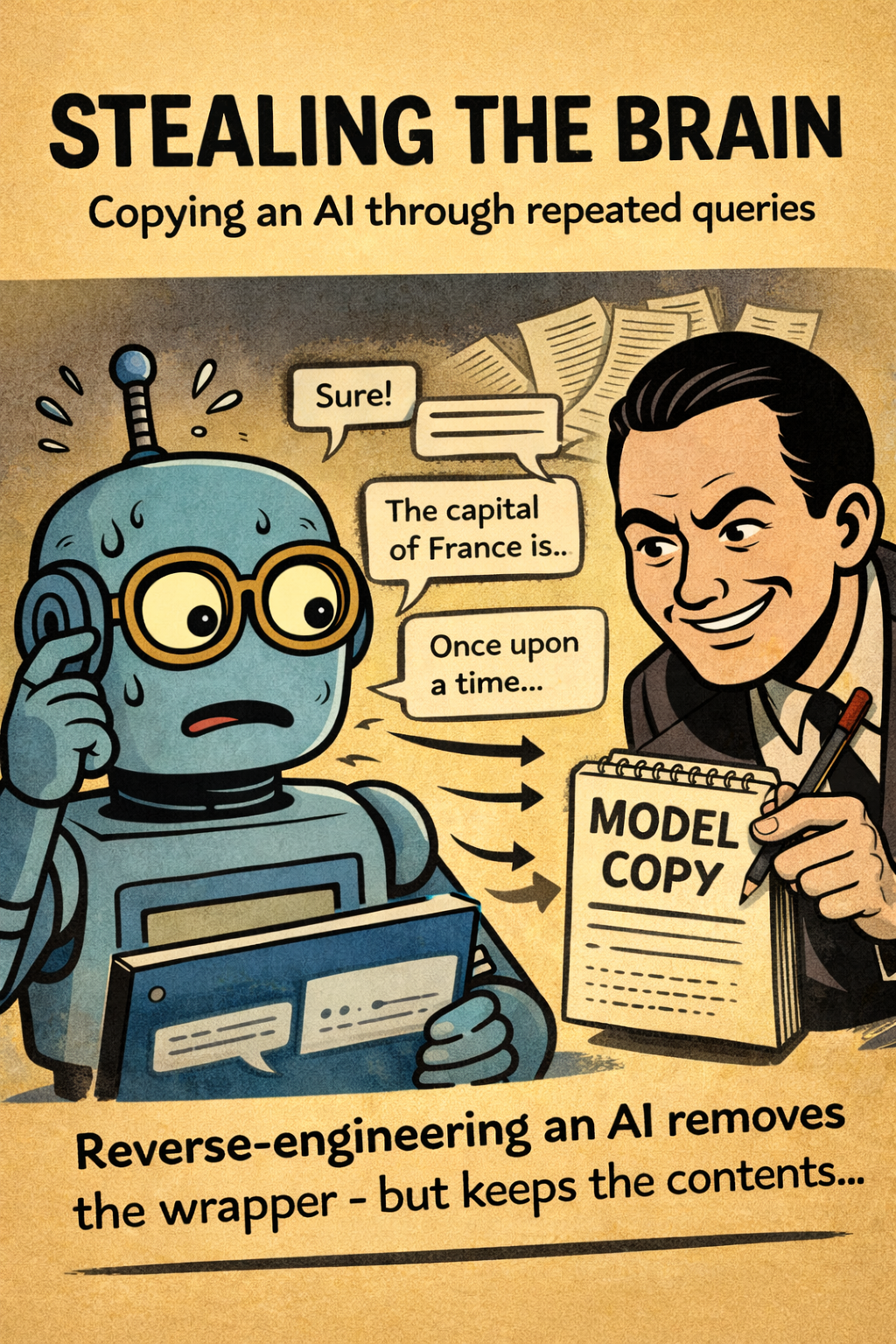

When people think about stealing software, they usually imagine source code leaks or hacked servers. But with AI, there's a stranger and quieter method — one that doesn't require breaking in at all.

It's called model extraction, and it's essentially a way to steal an AI's behavior by talking to it long enough.

No stolen files.

No breached servers.

Just questions… lots of them.

The Big Idea

AI models are valuable because of how they respond. If an attacker can repeatedly query a model and carefully study the outputs, they can gradually build a copy that behaves similarly — sometimes close enough to be commercially useful.

Think of it like reverse-engineering a chef's secret recipe by eating the dish over and over again and taking careful notes.

How Model Extraction Works (Simply)

An attacker doesn't rely on random questions alone. They ask strategic ones:

- Slight variations of the same prompt

- Edge cases and corner scenarios

- Inputs designed to expose how the model "thinks"

Over time, they collect thousands or millions of responses and use them as training data for their own model, which learns to mimic the original.

They're not stealing the brain tissue — they're recreating the thought patterns.
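Here is a minimal Python sketch of that query-then-train loop under toy assumptions. The "victim" is a hypothetical two-feature logistic-regression classifier hidden behind a `query()` function. The names `_SECRET_W`, `_SECRET_B`, `extract()` and `agreement()` are illustrative only and are not any real API. Real attacks on language models send text and train on text, but the pattern is the same: send inputs, log the answers, and fit a copy to those answers.

```python
import math
import random

# Hypothetical victim: a secret logistic-regression classifier that the
# attacker can reach only through query(). The numbers are made up.
_SECRET_W = (2.0, -3.0)
_SECRET_B = 0.5


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def query(x):
    """Black-box 'API': returns the victim's probability for class 1."""
    return sigmoid(_SECRET_W[0] * x[0] + _SECRET_W[1] * x[1] + _SECRET_B)


def surrogate_prob(w, b, x):
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)


def extract(n_queries=1000, epochs=300, lr=1.0, seed=0):
    """Query the victim, then fit a copy to its answers (soft-label training)."""
    rng = random.Random(seed)
    # Step 1: send queries and log the victim's responses.
    xs = [(rng.uniform(-2, 2), rng.uniform(-2, 2)) for _ in range(n_queries)]
    ys = [query(x) for x in xs]
    # Step 2: full-batch gradient descent on cross-entropy, using the
    # victim's probabilities as the training targets.
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        g0 = g1 = gb = 0.0
        for x, y in zip(xs, ys):
            err = surrogate_prob(w, b, x) - y
            g0 += err * x[0]
            g1 += err * x[1]
            gb += err
        w[0] -= lr * g0 / n_queries
        w[1] -= lr * g1 / n_queries
        b -= lr * gb / n_queries
    return w, b


def agreement(w, b, n=1000, seed=1):
    """Fraction of fresh inputs where the copy and the victim give the same label."""
    rng = random.Random(seed)
    same = 0
    for _ in range(n):
        x = (rng.uniform(-2, 2), rng.uniform(-2, 2))
        same += (query(x) >= 0.5) == (surrogate_prob(w, b, x) >= 0.5)
    return same / n


if __name__ == "__main__":
    w, b = extract()
    print("recovered weights:", w, "bias:", b)
    print("label agreement with victim:", agreement(w, b))
```

The attacker's code never reads `_SECRET_W` or `_SECRET_B`. Everything the copy knows comes from `query()`, which mirrors the "recreating the thought patterns" point above.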

Why This Is a Real Problem

Model extraction isn't just academic — it has serious consequences:

1. Intellectual Property Theft

Companies spend enormous resources training models. Extraction lets attackers:

- Clone paid models

- Undercut pricing

- Bypass licensing entirely

2. Safety Controls Get Lost

The extracted model often lacks:

- Guardrails

- Safety tuning

- Ethical constraints

What was once a controlled system becomes a raw, unfiltered version in someone else's hands.

3. Competitive Espionage

In business settings, a competitor could:

- Learn how your internal AI evaluates risk

- Infer decision logic

- Replicate proprietary workflows
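"Infer decision logic" can be surprisingly cheap. Suppose, hypothetically, that an internal loan model approves an applicant when `income - 2.5 * debt > 40000`, and outsiders only ever see approve or deny. A binary search for the income at which the decision flips, run at two different debt levels, is enough to recover both numbers. The rule, the `approve()` function and the figures are invented for this sketch. Real risk models are usually more complex, but the probing idea is the same.

```python
# Hypothetical internal risk model. Outsiders only see True/False.
def approve(income, debt):
    return income - 2.5 * debt > 40_000


def find_threshold(debt, lo=0.0, hi=1_000_000.0, tol=0.01):
    """Binary-search the smallest income that gets approved at this debt level."""
    if approve(lo, debt) or not approve(hi, debt):
        raise ValueError("threshold is not inside [lo, hi]")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if approve(mid, debt):
            hi = mid  # approved: threshold is at or below mid
        else:
            lo = mid  # denied: threshold is at or above mid
    return hi


def infer_rule():
    """Probe two debt levels and solve for the hidden linear rule."""
    t0 = find_threshold(debt=0)        # income needed with no debt
    t1 = find_threshold(debt=10_000)   # income needed with 10k of debt
    slope = (t1 - t0) / 10_000         # extra income required per unit of debt
    return slope, t0


if __name__ == "__main__":
    slope, intercept = infer_rule()
    print(f"inferred rule: approve if income - {slope:.3f} * debt > {intercept:,.0f}")
```

Each threshold search uses only a few dozen yes/no answers, and none of them look unusual on their own.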

Analogy Time

Imagine playing chess against a grandmaster — not once, but thousands of times. You record every move they make in every situation.

Eventually, you can teach another player to behave just like them — not because you know how they think, but because you've seen how they act.

That's model extraction.

"But Aren't APIs Protected?"

Yes — rate limits, usage monitoring, and authentication help. But none are foolproof.

Attackers can:

- Spread queries across many accounts

- Move slowly to avoid detection

- Blend malicious extraction traffic into normal usage

To the system, it just looks like a very curious user.
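A simple per-account limiter shows why spreading queries across accounts works. The sketch below assumes a hypothetical sliding-window limit of 10 requests per 60 seconds per API key. `PerKeyRateLimiter` and `simulate()` are illustrative names and do not represent any provider's real implementation. The same 600 requests, one per second, are sent first from a single account and then round-robin across ten accounts.

```python
from collections import defaultdict, deque


class PerKeyRateLimiter:
    """Sliding-window limiter: at most `limit` requests per key per `window` seconds."""

    def __init__(self, limit, window):
        self.limit = limit
        self.window = window
        self.history = defaultdict(deque)  # key -> timestamps of accepted requests

    def allow(self, key, now):
        q = self.history[key]
        # Forget requests that have aged out of the window.
        while q and now - q[0] >= self.window:
            q.popleft()
        if len(q) < self.limit:
            q.append(now)
            return True
        return False


def simulate(num_accounts, total_requests=600, limit=10, window=60):
    """Send one request per second, rotating across accounts; count acceptances."""
    limiter = PerKeyRateLimiter(limit, window)
    accepted = 0
    for t in range(total_requests):
        key = f"account-{t % num_accounts}"
        if limiter.allow(key, now=t):
            accepted += 1
    return accepted


if __name__ == "__main__":
    print("1 account:  ", simulate(num_accounts=1), "of 600 accepted")
    print("10 accounts:", simulate(num_accounts=10), "of 600 accepted")
```

With one account, only 100 of the 600 requests get through. Spread over ten accounts, each key sees just 6 requests per minute, so all 600 are accepted. A limit keyed only on the account cannot see the combined traffic.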

The Bigger Picture

Model extraction highlights a fundamental shift:

With AI, behavior is the product.

And anything that exposes behavior at scale is vulnerable to being copied.

This is why AI security increasingly looks less like traditional cybersecurity and more like protecting trade secrets in public view.

The Takeaway

You don't need to steal an AI's code to steal its value.

Sometimes, all you need is patience, a plan, and enough questions.

And as AI becomes embedded in everything from customer support to financial decisions, that quiet kind of theft becomes very attractive.

👉 Next in the series: "AI + People = A New Playground for Scams" — where social engineering meets machine intelligence.